The Essential Artificial Intelligence Glossary for Marketers (90+ Terms)

AI has transformed the business landscape, and while everyone can benefit from it, not everyone has the technological background to understand exactly how it works and what it does.

This AI glossary covers the essential terms and definitions you need to know to understand the technology you use.

Why Artificial Intelligence Matters for Marketers

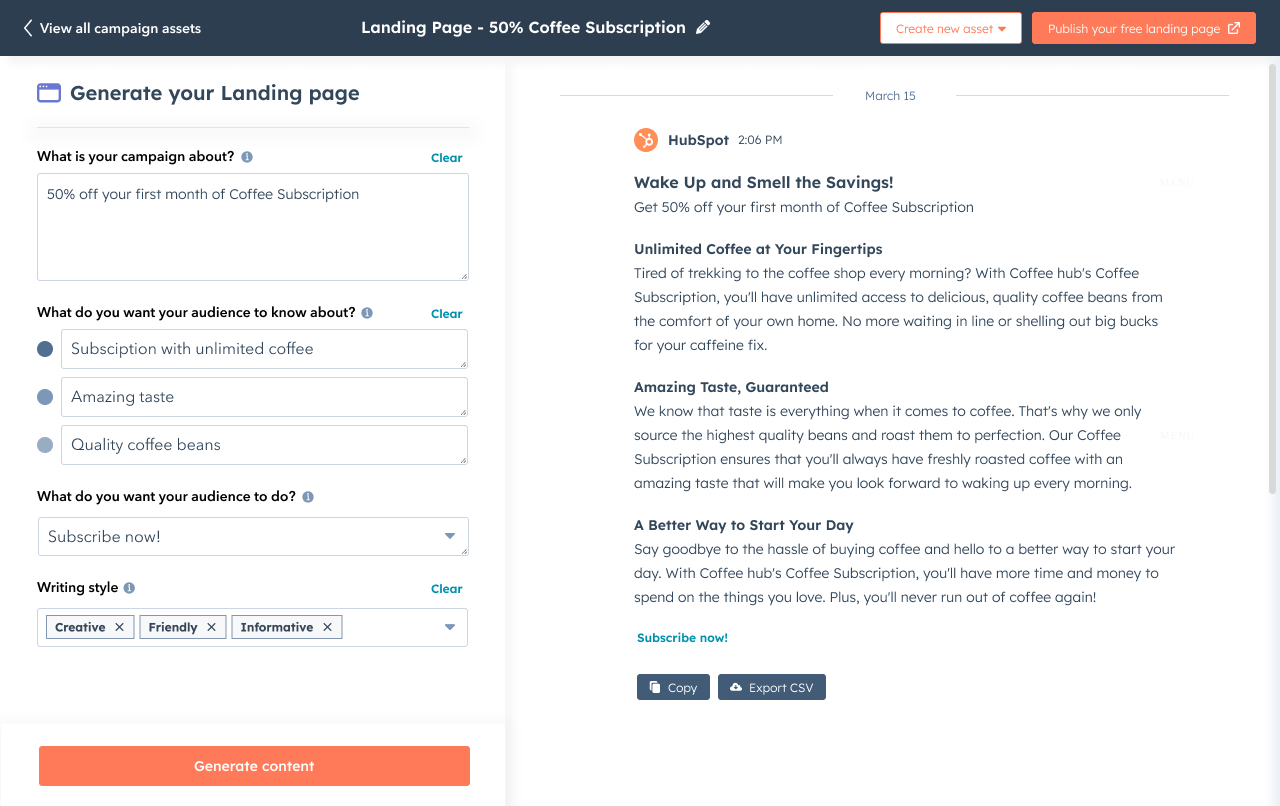

Artificial intelligence is important for marketers because it can support crucial parts of the marketing process, like SEO research, campaign personalization, data analysis, and content creation. For example, a tool like Campaign Assistant uses text inputs (also called prompts) to help marketers seamlessly and quickly create copy for landing pages, emails, and ad campaigns.

When AI assists with key marketing tasks, it also saves you time that you can redirect toward optimizing your campaigns.

Read through the AI glossary below to learn more about how the AI tools you use work.

Artificial Intelligence Terms Marketers Need to Know

A

- Algorithm – An algorithm is a set of step-by-step instructions that a computer follows to solve a problem or complete a task. In machine learning, models use algorithms to learn from data and make predictions about the data they analyze. Social media networks, for example, use algorithms that analyze users’ previous behavior on the platform to show them the content they’re most likely to enjoy.

- Artificial intelligence – Artificial intelligence is an area of computer science where machines complete tasks that would require intelligence if done by a human, like learning, seeing, talking, reasoning, or problem-solving.

- Artificial General Intelligence (AGI) – AGI is the second of three stages of AI, where systems have intelligence that allows them to learn and adapt to new situations, think abstractly, and solve problems on par with human intelligence. We are currently in the first stage of AI, and AGI is still mainly theoretical. Also called general intelligence or strong AI.

- AI analytics – AI analytics is a type of analysis that uses machine learning to process large amounts of data and identify patterns, trends, and relationships. It can run with minimal human input, and businesses can use the results to make data-driven decisions and remain competitive.

- AI assistant – An AI assistant, usually a chatbot or virtual assistant, uses artificial intelligence to understand and respond to human requests. It can schedule meetings, answer questions, and automate repetitive tasks to save time and improve efficiency.

- AI bias – AI bias is the tendency of machine learning systems to produce biased outputs, often because they were trained on biased data. These outputs can perpetuate and reinforce stereotypes that harm specific communities.

- AI chatbot – An AI chatbot is a program that uses machine learning (ML) and natural language processing (NLP) to have human-like conversations. AI chatbots are popular on websites, apps, and social media platforms for handling customer conversations.

- AI ethics – AI ethics is the practice of considering the implications of using AI and ensuring it’s used in ways that don’t harm users or anyone who interacts with it. Because AI is a growing field, AI ethics is constantly evolving and being researched.

- Anthropomorphize – Anthropomorphization is when humans assign human qualities to AI systems because of how well they replicate human abilities. It’s one of the reasons people think AI is sentient, but experts and scientists say these apparent human qualities simply reflect models doing what they’re programmed to do. Enzo Pasquale Scilingo, a bioengineer at the Research Center E. Piaggio at the University of Pisa in Italy, says, “We attribute characteristics to machines that they do not and cannot have…As intelligent as they are, they cannot feel emotions. They are programmed to be believable.”

- Augmented reality (AR) – Augmented reality layers virtual elements onto real-world scenes, letting users stay in their current space while interacting with augmented elements. Snapchat filters are an example of AR.

- Autonomous machine – An autonomous machine can learn, reason, and make decisions with the data it has and without human intervention. Self-driving cars are autonomous machines.

- Auto-complete – Auto-complete analyzes text or voice input and suggests likely next words or phrases based on patterns it learns from historical data, as well as an individual’s language patterns and context.

- Auto classification – Auto classification is the automatic categorizing and tagging of data into predefined categories, making it easier to organize, manage, and retrieve information.
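
To see the idea behind auto-complete in action, here’s a minimal Python sketch that suggests the next word by counting which words followed each other in a small sample of past text (a bigram model). The sample subject lines and the `train_bigrams` and `suggest` functions are hypothetical; real auto-complete tools learn from far more data and typically use neural language models.

```python
from collections import Counter, defaultdict

# Hypothetical helpers for illustration, not a real auto-complete API
def train_bigrams(sentences):
    """Count how often each word is followed by each other word."""
    follows = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows

def suggest(follows, word, k=3):
    """Return up to k words that most often followed `word` in the sample."""
    return [w for w, _ in follows.get(word.lower(), Counter()).most_common(k)]

# Hypothetical historical data: past email subject lines
history = [
    "get your free trial today",
    "get your free guide",
    "get started today",
    "start your free trial",
]
model = train_bigrams(history)
print(suggest(model, "free"))  # ['trial', 'guide']
```

In this sample, “free” was followed by “trial” twice and “guide” once, so “trial” is suggested first.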

B

- Bard – Bard is Google’s conversational AI that runs on LaMDA (language model for dialogue applications). It’s similar to ChatGPT but has added functionality to pull information from the internet.

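- Bayesian network – A Bayesian network is a probability model that represents a set of variables and how they depend on each other, which lets it estimate the probability of an event occurring given what is known about related events. AI can help create Bayesian networks because it can evaluate data very quickly.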

- BERT – Bidirectional Encoder Representations from Transformers (BERT) is Google’s deep learning model designed specifically for natural language processing tasks like answering questions, analyzing sentiment, and classifying text.

- Bing Search – Bing Search is Microsoft’s search engine, powered by machine learning, that uses neural networks to understand prompt inputs, surface the most relevant results, and generate answers. It can also produce new text-based content and images.

- Bots – Bots, often called chatbots, are text-based programs that people use to automate tasks or find information. Bots can be rule-based, completing only predefined tasks, or they can have more complex functionality.
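
To make the Bayesian network entry more concrete, here’s a minimal Python sketch of a hypothetical three-variable network: opening an email influences clicking a link, and clicking influences purchasing. The probabilities are made up, and the sketch answers one question (how likely is it that a buyer opened the email?) by summing over every value of the unobserved variable. That brute-force approach only works for tiny networks; dedicated libraries handle larger ones.

```python
from itertools import product

# Hypothetical conditional probability tables (illustrative numbers only)
P_OPENED = {True: 0.2, False: 0.8}                  # P(Opened)
P_CLICKED = {True: {True: 0.3, False: 0.7},         # P(Clicked | Opened)
             False: {True: 0.0, False: 1.0}}
P_PURCHASED = {True: {True: 0.1, False: 0.9},       # P(Purchased | Clicked)
               False: {True: 0.01, False: 0.99}}

def joint(opened, clicked, purchased):
    """Probability of one full assignment, using the network's factorization."""
    return (P_OPENED[opened]
            * P_CLICKED[opened][clicked]
            * P_PURCHASED[clicked][purchased])

def prob_opened_given_purchase():
    """P(Opened=True | Purchased=True), summing out the hidden Clicked variable."""
    numerator = sum(joint(True, c, True) for c in (True, False))
    evidence = sum(joint(o, c, True) for o, c in product((True, False), repeat=2))
    return numerator / evidence

print(round(prob_opened_given_purchase(), 3))  # 0.481
```

With these made-up numbers, about 48% of buyers had opened the email, compared with the 20% base rate. That kind of updated estimate is what a Bayesian network is built to produce.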

C

- Chatbot – A chatbot simulates human conversations online by answering common questions or routing people to the right resources to solve their needs.

- ChatGPT – ChatGPT is a conversational AI that runs on GPT, a language model that uses natural language processing to understand text prompts, answer questions, and generate content.

- Cognitive Science – Cognitive science studies the mind and its processes. Artificial intelligence draws on cognitive science by applying models of the mind (like neural networks) to machines.

- Composite AI – Composite AI combines different AI technologies and techniques and makes them work together to solve problems and handle complex tasks.

- Computer vision – Computer vision is a field of AI in which models (often deep learning models) analyze, interpret, and understand visual information, namely images and videos. Reverse image search is an example of computer vision.

- Conversational AI – Conversational AI is technology that mimics a human conversational style and can have logical and accurate conversations. It uses natural language processing (NLP) and natural language generation (NLG) to gather context and respond in a relevant way.

D

- Data mining – Data mining is discovering patterns, relationships, and trends in large data sets to draw insights. Machine learning algorithms accelerate the process by completing it much faster. Recommendation algorithms are a form of data mining: they analyze large amounts of user data to make recommendations.

- Deep learning – Deep learning is a type of machine learning that uses neural networks with many layers, loosely inspired by the human brain, to learn patterns from large amounts of data.

- DALL-E – DALL-E is OpenAI’s generative system that uses detailed natural language prompts to create images and art.
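
A bare-bones version of the “frequently bought together” idea behind many recommendations is counting how often items appear in the same order. This Python sketch does that for a handful of made-up orders; the product names, the `min_support` threshold of 2, and the `frequent_pairs` and `recommend` helpers are hypothetical, not how any particular recommendation engine works.

```python
from collections import Counter
from itertools import combinations

# Hypothetical helpers for illustration: simple co-occurrence (market basket) counting
def frequent_pairs(baskets, min_support=2):
    """Count item pairs that appear together in at least `min_support` baskets."""
    pair_counts = Counter()
    for basket in baskets:
        # set() ignores duplicates; sorted() gives each pair one canonical order
        for pair in combinations(sorted(set(basket)), 2):
            pair_counts[pair] += 1
    return {pair: n for pair, n in pair_counts.items() if n >= min_support}

def recommend(baskets, item, min_support=2):
    """Suggest items frequently bought with `item`, most frequent first."""
    scores = Counter()
    for (a, b), n in frequent_pairs(baskets, min_support).items():
        if a == item:
            scores[b] = n
        elif b == item:
            scores[a] = n
    return [other for other, _ in scores.most_common()]

# Hypothetical order history
orders = [
    ["ebook", "webinar", "template"],
    ["ebook", "template"],
    ["webinar", "course"],
    ["ebook", "template", "course"],
    ["ebook", "webinar"],
]
print(recommend(orders, "ebook"))  # ['template', 'webinar']
```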

E

- Emergent behavior – Emergent behavior is when AI completes tasks or develops skills it wasn’t explicitly built or programmed for.

- Entity annotation – Entity annotation is a natural language processing technique that labels words or phrases in text with predefined categories (like a person’s name) to make information easier to analyze, understand, and organize. Also called entity extraction.

- Expert systems – An expert system replicates human experts’ decision-making abilities in a specific field.

- Explainable AI (XAI) – Explainable AI is AI designed so humans can understand how it works and why it makes particular decisions or predictions. Understanding the machines we use increases trust and accountability and supports the ethical use of AI outputs in day-to-day life.

F

- Feature engineering – Feature engineering is selecting and transforming specific features (measurable properties) from raw data so a system knows what to learn from during training.

- Feature extraction – Feature extraction is when a machine breaks input down into specific features and uses those features to classify and understand it. In image recognition, a specific element of an image can be defined as a feature, and the feature is used to predict the likelihood of what an entire image might be.

G

- Generative AI – Generative AI creates new content in response to prompts by drawing on patterns it identified in its training data. Its outputs can include answers to questions, text, images, audio, video, code, and even synthetic data.

- General Intelligence – General intelligence is the second of the three stages of AI. See Artificial General Intelligence (AGI).

- GPT – Generative Pre-trained Transformer (GPT) is OpenAI’s language model that is trained on large amounts of data and, from its training, can understand natural language inputs to answer questions, have human-like conversations, and produce content. GPT-4 was the latest and most advanced iteration of GPT when this glossary was written.

H

- Hallucination – Hallucination is when AI produces outputs that are factually incorrect or made up but presents them as if they were true.

I

- Image recognition – Image recognition is a machine learning process that uses algorithms to identify objects, people, or places in images and videos. Google Lens is an example of image recognition. Also called image classification.

L

- Large Language Model (LLM) – An LLM is a language model trained on a very large data set of text, which it uses to complete tasks like answering questions, summarizing information, or generating content. GPT and LaMDA are large language models.

- Language Model for Dialogue Applications (LaMDA) – LaMDA is Google’s large language model that can have realistic conversations with humans, give relevant responses, and produce new content.

- Limited memory AI – Limited memory AI is the second of four types of AI. These systems can use a limited amount of stored or recent data to inform their decisions but can’t extend their abilities beyond that. They also don’t permanently retain past experiences to learn from when completing future tasks.

M

- Machine Learning – Machine learning is a type of artificial intelligence where machines use data and algorithms to make decisions and predictions and complete tasks. Machine learning systems become more accurate over time as they get new data to learn from.

- Midjourney – Midjourney is a generative AI model that can produce new images from natural language prompts.

N

- Narrow AI – Narrow AI is a system designed to perform a specific task or set of tasks but can’t adapt to do anything beyond that. It’s the first of three stages of AI, and most systems today are narrow AI. Also called weak AI and narrow intelligence.

- Natural Language Processing (NLP) – Natural language processing is a machine’s ability to understand and interpret language (both spoken and written) and use that understanding to have conversational experiences. Spell check is a basic example, and language models are a more advanced form. Natural language understanding (NLU) is a closely related subfield focused on comprehending meaning.

- Natural Language Generation (NLG) – Natural language generation is a model’s ability to produce human-like text from what it understands, whether it’s answering a question or creating an outline for an essay.

- Natural Language Query (NLQ) – A natural language query is a written input phrased the way you would say it aloud, without special characters or query syntax.

- Neural networks – In AI, a neural network is a computing system loosely modeled on the human brain’s network of neurons. Layers of interconnected nodes let the system learn from data and make predictions.

O

- OpenAI – OpenAI is an artificial intelligence research laboratory that created GPT, DALL-E, and other AI tools.

P

- Pattern recognition – Pattern recognition is a machine’s ability to recognize patterns in data based on what it learned during training.

- Predictive analytics – In AI, predictive analytics uses algorithms to predict the likelihood of a future occurrence based on patterns in historical data.

- Prompts – A prompt is a natural language input given to a model. Prompts can be questions, tasks to complete, or a description of the type of content a user wants AI to create. A simple definition is an instruction that prompts a model to do something.

- Prompt engineering – Prompt engineering is finding the right words and phrases to help generative systems understand the exact intent of an input. For example, it means figuring out the best words to use so AI writes exactly what you’re looking for.
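
As a simple illustration of predictive analytics, this Python sketch fits a straight-line trend to six months of hypothetical newsletter sign-ups using ordinary least squares, then projects month seven. A linear trend is one of the most basic predictive models, chosen here for readability; the data and the `fit_line` helper are made up, and real tools typically use richer models and many more variables.

```python
# Hypothetical helper for illustration: ordinary least-squares line fit
def fit_line(xs, ys):
    """Fit y = slope * x + intercept to historical data points."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    return slope, mean_y - slope * mean_x

# Hypothetical monthly newsletter sign-ups for months 1 through 6
months = [1, 2, 3, 4, 5, 6]
signups = [120, 135, 150, 160, 178, 190]

slope, intercept = fit_line(months, signups)
forecast = slope * 7 + intercept  # predict month 7 from the historical trend
print(round(forecast))  # 204
```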

R

- Reactive machines – Reactive machines are the first of the four types of AI. These systems have no memory or understanding of context and can only complete a specific task. Rule-based chatbots are reactive machines. Also called reactive AI.

- Reinforcement learning – Reinforcement learning is a machine learning process where systems learn and correct themselves through trial and error, receiving rewards or penalties based on the outcomes of their actions.

- Responsible AI – Responsible AI is developing and deploying AI ethically and with good intentions so that it doesn’t perpetuate biases and harmful stereotypes.

- Robotics – Robotics is the design and building of robots that can perform tasks and take actions, often using AI to operate without human guidance.
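
The reinforcement learning entry above describes learning by trial and error. A classic, very small instance is the multi-armed bandit problem, sketched here in Python with the epsilon-greedy strategy: most of the time the learner picks the option with the best average reward so far, and occasionally it tries a random one. The three subject lines, their click rates, and the `run_bandit` helper are hypothetical, and full reinforcement learning also handles changing situations and sequences of actions, which this sketch leaves out.

```python
import random

# Hypothetical helper for illustration: an epsilon-greedy multi-armed bandit
def run_bandit(true_rates, rounds=10_000, epsilon=0.1, seed=42):
    """Learn by trial and error which option earns the most reward."""
    rng = random.Random(seed)          # seeded so runs are reproducible
    n = len(true_rates)
    counts = [0] * n                   # how many times each option was tried
    estimates = [0.0] * n              # running average reward per option
    for _ in range(rounds):
        if rng.random() < epsilon:
            choice = rng.randrange(n)                          # explore
        else:
            choice = max(range(n), key=estimates.__getitem__)  # exploit best so far
        # Simulated feedback: reward 1 for a click, 0 otherwise
        reward = 1 if rng.random() < true_rates[choice] else 0
        counts[choice] += 1
        # Incremental mean: nudge the estimate toward the latest reward
        estimates[choice] += (reward - estimates[choice]) / counts[choice]
    return counts, estimates

# Hypothetical click rates for three subject lines (hidden from the learner)
counts, estimates = run_bandit([0.02, 0.04, 0.15])
print(counts)  # most tries should go to the third subject line
```

The small `epsilon` is the design trade-off: higher values explore more and find good options sooner, but waste more tries on weak ones.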

S

- Self-aware AI – Self-aware AI is the fourth of the four types of AI. It’s a further evolution of theory of mind AI, and machines can understand human emotions and have their own emotions, needs, and beliefs. Sentient AI is self-aware AI and, at the moment, is a theoretical concept.

- Semantic analysis – Semantic analysis is how machines draw meaning from inputs. It’s related to natural language processing but focuses on meaning, so it can account for more complex factors, like cultural context.

- Sentiment analysis – Sentiment analysis is the process of identifying emotional signals and cues in text to predict a statement’s overall sentiment.

- Sentient AI – Sentient AI is a theoretical form of AI that would feel and have experiences at the same level as humans. It would be emotionally intelligent and able to perceive the world and turn those perceptions into feelings.

- Structured data – Structured data is data organized in a predefined format, like rows and columns in a spreadsheet, that machine learning algorithms can easily process.

- Supervised learning – Supervised learning is a machine learning approach where a model learns from labeled data: humans provide example inputs paired with the expected outputs, and the model learns to predict the right output for new inputs.

- Artificial superintelligence (ASI) – ASI is the third and most advanced stage of AI, where systems can solve complex problems and make decisions beyond the abilities of human intelligence. It’s a hot topic for debate because its potential and risks are purely speculative. Also called super AI and superintelligence.

- Stable Diffusion – Stable Diffusion is a generative model that creates images from detailed text descriptions (prompts).

- Singularity – The singularity is a hypothetical future point at which AI systems experience uncontrolled growth and take actions that could significantly impact human life. It’s closely related to superintelligence and sentient AI.
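
The supervised learning and sentiment analysis entries fit together in one small example. This Python sketch trains a naive Bayes classifier, a classic and simple technique, on a handful of made-up reviews that humans have labeled positive or negative, then predicts the sentiment of new text. The training data and the `train` and `predict` helpers are hypothetical; production sentiment tools use much larger data sets and usually more advanced models.

```python
import math
from collections import Counter, defaultdict

# Hypothetical helpers for illustration: a tiny naive Bayes text classifier
def train(examples):
    """Supervised learning: count words per label from human-labeled examples."""
    word_counts = defaultdict(Counter)
    label_counts = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts[label].update(text.lower().split())
    vocab = set().union(*word_counts.values())
    return word_counts, label_counts, vocab

def predict(model, text):
    """Return the label with the highest naive Bayes log-probability."""
    word_counts, label_counts, vocab = model
    total = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label, counts in word_counts.items():
        n_words = sum(counts.values())
        score = math.log(label_counts[label] / total)  # prior: how common the label is
        for word in text.lower().split():
            # Add-one smoothing keeps unseen words from zeroing out the score
            score += math.log((counts[word] + 1) / (n_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label

# Hypothetical labeled data: humans supplied the expected output for each review
training = [
    ("love this product", "positive"),
    ("great support and fast shipping", "positive"),
    ("really happy with it", "positive"),
    ("terrible experience", "negative"),
    ("shipping was slow and support was rude", "negative"),
    ("not happy at all", "negative"),
]
model = train(training)
print(predict(model, "great product"))  # positive
print(predict(model, "rude and slow"))  # negative
```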

T

- Theory of mind AI – Theory of mind AI is the third of the four types of AI. It’s an advanced, still largely theoretical class of technology that could understand humans’ mental states and use that knowledge to have real interactions. For example, a system that understands a disgruntled customer’s emotions could use that knowledge to respond accordingly.

- Token – A token is a single unit of data that a language model processes to make sense of input and make a prediction. A token can be a whole word, part of a word (a subword), a single character, or a piece of visual information, like a patch of an image.

- Training data – Training data is what a machine is given to learn from to complete its future tasks.

- Transfer learning – Transfer learning is a machine learning technique where a pre-trained model is used as the starting point for a new task.

- Turing Test – Alan Turing proposed the Turing Test in 1950 to assess whether a machine could behave in a way indistinguishable from a human. He originally called it the imitation game.
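
To show what the token entry means in practice, this Python sketch splits text two ways: into word and punctuation tokens with a regular expression, and into subword tokens by greedily matching the longest piece found in a tiny vocabulary. The vocabulary and the `word_tokens` and `subword_tokens` helpers are hypothetical and simplified; production language models learn their subword vocabularies from data (GPT models, for example, use byte-pair encoding), so their token boundaries will differ.

```python
import re

# Hypothetical helpers for illustration, not any real model's tokenizer
def word_tokens(text):
    """Split text into word tokens and single punctuation tokens."""
    return re.findall(r"\w+|[^\w\s]", text)

def subword_tokens(word, vocab):
    """Greedy longest-match split of one word into pieces from a fixed vocabulary."""
    pieces, start = [], 0
    while start < len(word):
        # Try the longest remaining substring first, shrinking until one matches
        for end in range(len(word), start, -1):
            if word[start:end] in vocab:
                pieces.append(word[start:end])
                start = end
                break
        else:
            pieces.append(word[start])  # no match: fall back to a single character
            start += 1
    return pieces

vocab = {"market", "er", "s", "personal", "ization"}  # hypothetical vocabulary
print(word_tokens("Marketers love AI!"))         # ['Marketers', 'love', 'AI', '!']
print(subword_tokens("marketers", vocab))        # ['market', 'er', 's']
print(subword_tokens("personalization", vocab))  # ['personal', 'ization']
```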

U

- Unsupervised learning – Unsupervised learning is when a system is left to find patterns and draw conclusions and insights from data without human input. It’s the opposite of supervised learning.
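
Clustering is a common unsupervised learning task. This minimal Python sketch of k-means groups made-up customer records into segments without being told what the segments are: it repeatedly assigns each customer to the nearest cluster center, then moves each center to the average of its members. The data, the choice of two clusters, starting from the first k points, and the `kmeans` helper are assumptions for illustration; real uses typically scale the features and use better initialization.

```python
import math

# Hypothetical helper for illustration: basic k-means clustering
def kmeans(points, k, iterations=10):
    """Group points into k clusters without any labels."""
    centroids = list(points[:k])  # simple deterministic start: the first k points
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its assigned points
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[c] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return labels, centroids

# Hypothetical customers: (visits per month, average order value in dollars)
customers = [(1, 20), (2, 25), (1, 22), (8, 90), (9, 95), (10, 85)]
labels, centroids = kmeans(customers, k=2)
print(labels)  # [0, 0, 0, 1, 1, 1]
```

The algorithm separates occasional, low-spend customers from frequent, high-spend ones purely from the numbers; a marketer still has to decide what each segment means.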

V

- Virtual reality (VR) – VR is technology that immerses users in a three-dimensional, interactive virtual environment, usually through a headset or another sensory device.

W

- Weak AI – see Narrow AI.
